AI Interaction Platform

Machine learning

Unity ML-Agents

Nov 2019, ongoing

About

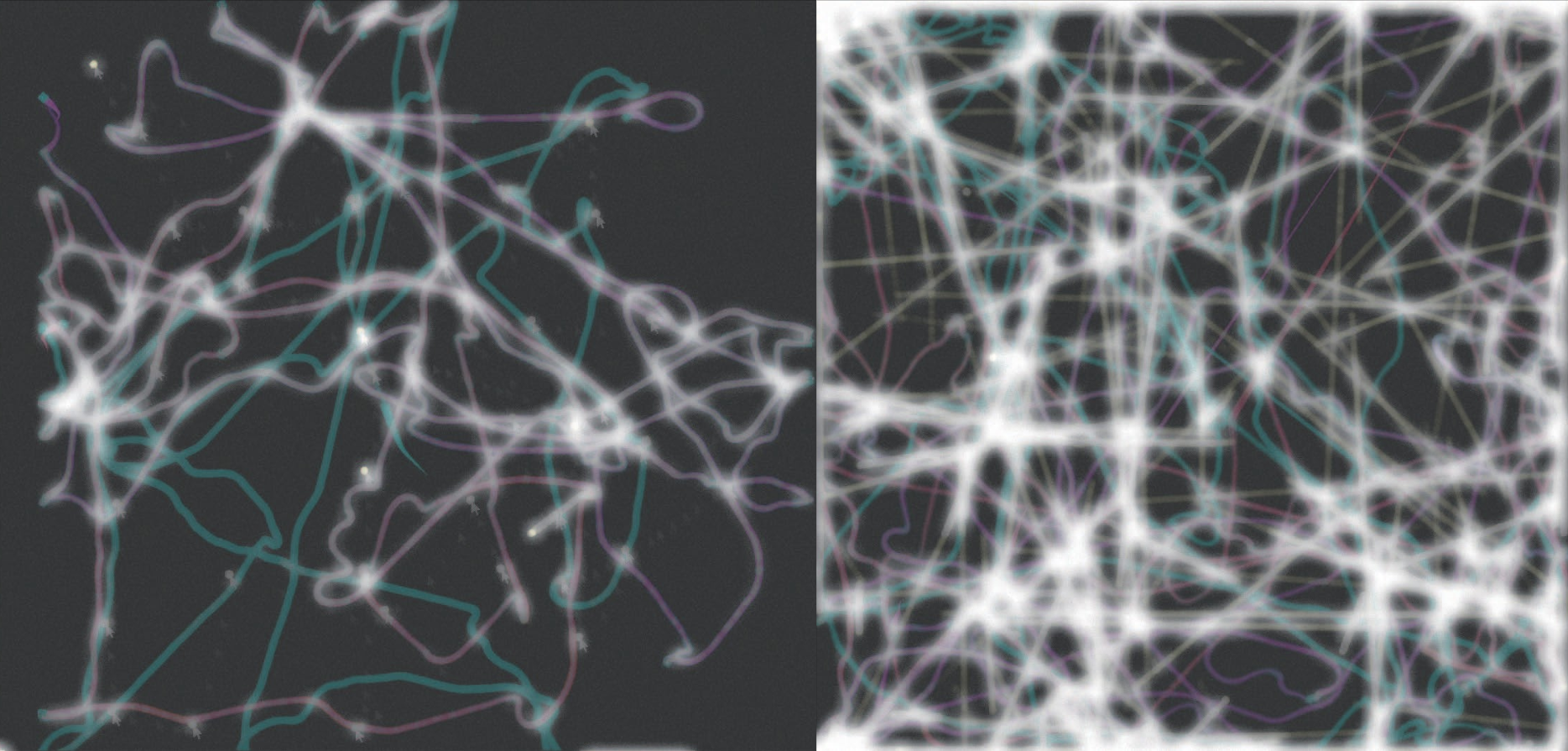

This is part of my work-in-progress research project on human-AI interaction. It is a sound generation program based on machine learning agents. Unlike machine learning approaches that generate sound directly, such as WaveNet or Magenta, it uses agents that were trained to complete simple tasks in a Unity3D environment. It draws on an analogy with how people interact with living systems, such as feeding pigeons or goldfish. In this project, the audience plays with a life-like artificial intelligence.

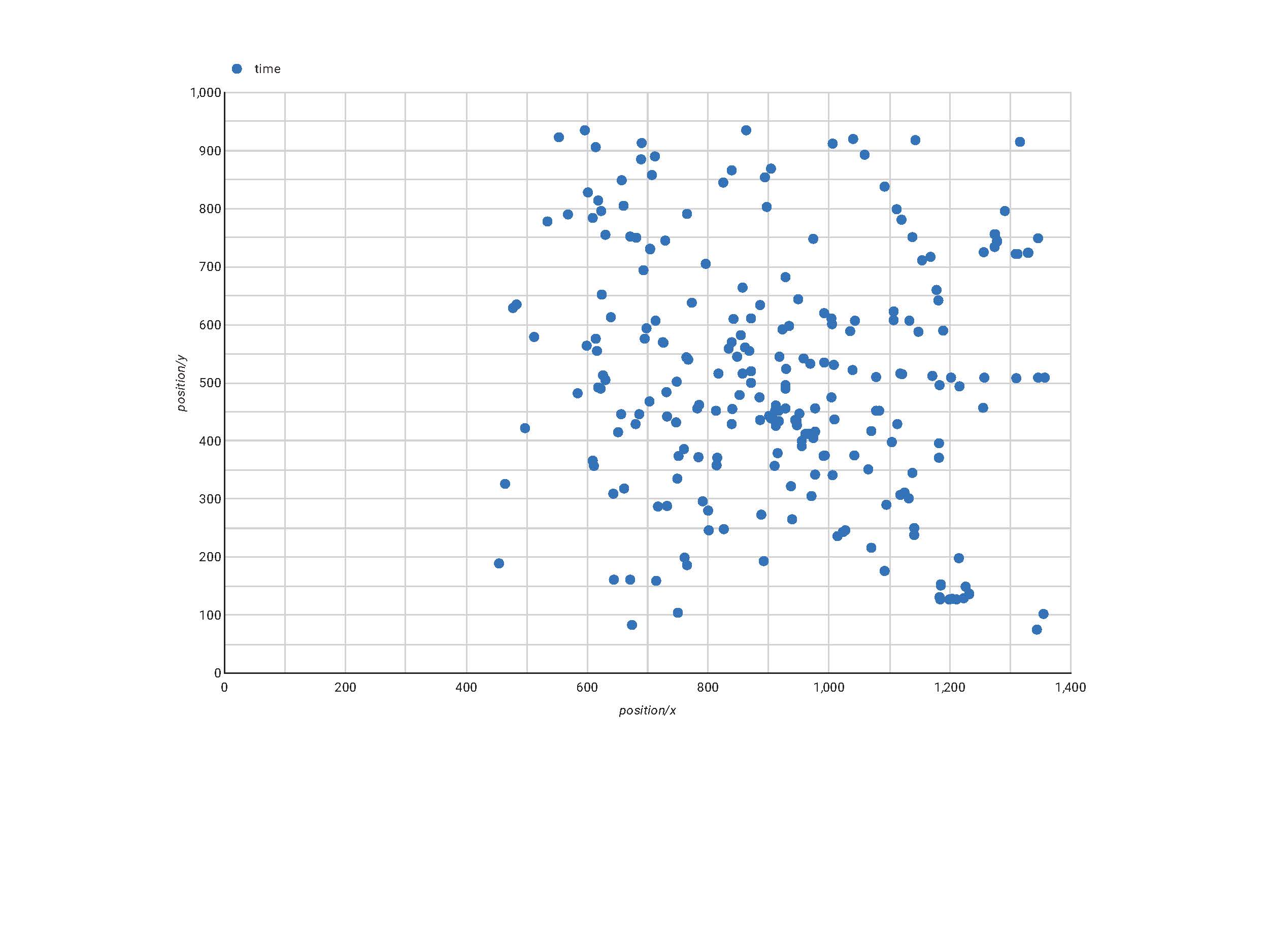

Visitors place dots by clicking. This lets them determine how high the notes are and how many notes there are in the space, but when each note is played is decided by the AI agents. Each dot carries a sound trigger: whenever an agent collects a dot, a tone is generated that depends on the dot’s position. Each agent has its own sound character, using a different synth waveform. While visitors play, their mouse-click data is collected anonymously.
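As a rough illustration of how a dot’s height could translate into a tone, here is a minimal Python sketch. It is not the project’s actual code (which runs in Unity): the pentatonic scale, the note range, and the function names `height_to_midi`, `midi_to_hz`, and `on_dot_collected` are all assumptions made for the example.

```python
# Hypothetical sketch: map a dot's vertical position to a pitch.
# The scale, note range, and function names are assumptions,
# not taken from the project itself.

PENTATONIC = [0, 2, 4, 7, 9]  # semitone offsets of a major pentatonic scale


def height_to_midi(y, base_note=48, octaves=3):
    """Map a normalized height y in [0, 1] to a MIDI note on the scale."""
    y = min(max(y, 0.0), 1.0)  # clamp clicks that land outside the play area
    steps = len(PENTATONIC) * octaves
    index = min(int(y * steps), steps - 1)
    octave, degree = divmod(index, len(PENTATONIC))
    return base_note + 12 * octave + PENTATONIC[degree]


def midi_to_hz(note):
    """Convert a MIDI note number to a frequency in Hz (note 69 = 440 Hz)."""
    return 440.0 * 2 ** ((note - 69) / 12)


def on_dot_collected(dot_y):
    """Called when an agent reaches a dot; returns the frequency to play."""
    return midi_to_hz(height_to_midi(dot_y))
```

In this sketch the visitor only controls the pitch, through where the dot is placed. The timing comes from whenever an agent happens to reach the dot and `on_dot_collected` is called.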

Dots generated

Interactable on PC or iPad

Data visualization

Royal College of Art WIP show

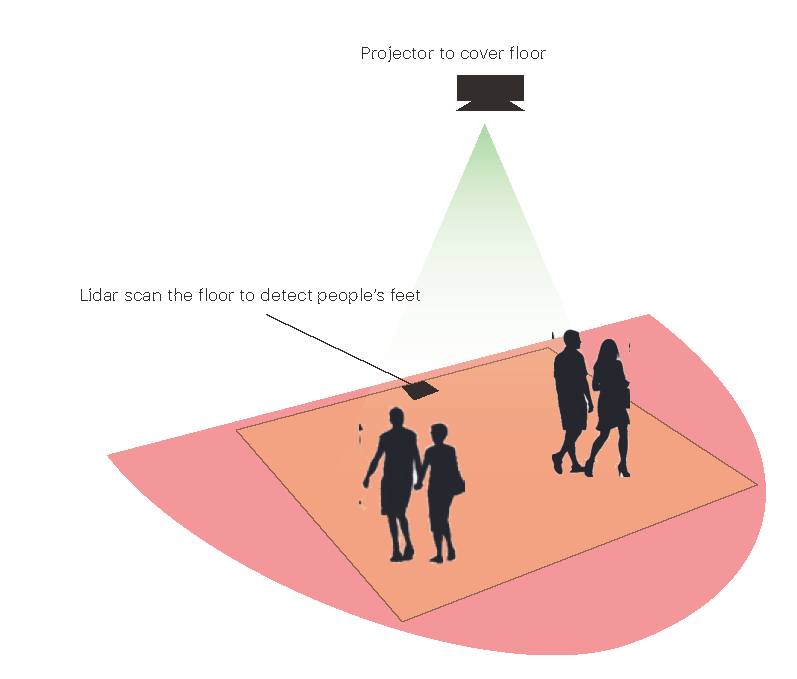

Lidar interaction